
StevenHwangPaper1 10 - 12 Dec 2008 - Main.StevenHwang


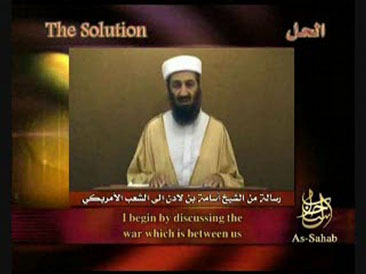

Legal Internet Use by Terrorists

Steve, I posted a comment a few days ago, but for some reason it did not show up on the page. I don't remember exactly what I wrote (perhaps it is recoverable from the server?), but I will try again here. For the most part I agree with your paper. I do think that regulation would be mostly futile and could also lead us down a slippery slope. Who decides what to censor? I do, however, think that clear attempts to instigate violence can be easily identified. But, as David writes above, there can certainly be gray areas. The beauty of an open forum website like YouTube is that people can leave comments or post video responses to debunk hate speech, correct misinformation, etc. The problem is that video posters can choose not to allow comments and can block video responses. Do you think that a site like YouTube could effectively deal with controversial videos (that might not be inherently violent) by mandating that all comments be allowed and all video responses be linked?

-- MarcRoitman - 10 Dec 2008


I have no idea why my comments aren't posting. Here is my response to John's and David's comments:

Thanks for the comments, guys.

John, regarding the locality of the servers, I would think it depends on how serious the threat is, i.e., the ever-elusive concept of "imminence." I would say that if the content were "the signal" for a coordinated attack or something like that, by all means we should block it to the extent we can. Even if we don't have access to foreign servers, we could block the incoming traffic a la China/Google.

As for imminence, I think we really have to decide it on a case-by-case basis, within certain guidelines. I think it absolutely does lead to difficulties, but such difficulties are not foreign to criminal justice or counterterrorism. That does not mean that it should be a blank slate, either. I think "imminent violence" as related to online content should be defined as violence that will take place in the future (especially near future) with a high degree of certainty, unless the content is removed.

As alluded to above, I think the trigger scenario (think "Relax" in Zoolander) is the clearest example of something that falls into this category. An angry person or group that says that America deserves to be bombed does not. Websites that recruit terrorists tread the line--on one hand, recruitment is not violence itself, but on the other, it might lead to violence in the future. I would say that for this scenario, we should not block general recruiting, but we should block recruiting that our other intelligence tells us is for the specific purpose of something big that will happen soon. A lot of the line-drawing really just depends on what other information we have, and on how certain we are, given all the circumstances, that taking down the content will save lives that would otherwise be lost.

David, regarding the cartoons--Thanks for bringing these up. I did not know about the situation, but it is a very interesting question given my paper. As stupid as I think the cartoons are, I think that such speech should generally be protected absent an imminent threat. It's a complex question and a really slippery slope here. The violence was an unfortunate and unintended consequence of their actions. I think if they planned to repeat it or make a series out of it, then it would be appropriate for the powers that be to have a talk with them and let them know that that's not a good idea. I feel like once you've done something that's already caused violence, you don't have the same liberties to do it again--you can't just claim non-intent if you know it will happen. But it looks like it was printed only once, and that beyond that it was other outlets that were reprinting it. The riots were sudden outbursts rather than planned attacks. The situations that I'm thinking of for taking down content are those where you know that something will surely happen unless you take down the content--posting tasteless political cartoons does not fall into this category absent that knowledge.

With regard to your question about user and intent, I think that actual effect is always more important. For instance, I might protect terrorists' cartoons that were trying to start violence (but failing miserably), yet take down political cartoons if we knew they were definitely going to cause violence.

-- StevenHwang - 12 Dec 2008


All material on this collaboration platform is the property of the contributing authors.

All material marked as authored by Eben Moglen is available under the license terms CC-BY-SA version 4.
